The low p-values for the baselines suggest that the difference in forecast accuracy between the Decompose & Conquer model and the baselines is statistically significant. The results highlight the superiority of the Decompose & Conquer model, especially when compared with the Autoformer and Informer models, where the difference in performance was most pronounced. In this set of tests, the significance level ( α

We can also set the windows, seasonal_deg, and iterate parameters explicitly. This may produce a worse fit, but it is just an example of how to pass these parameters to the MSTL class.

The success of Transformer-based models [20] in a variety of AI tasks, such as natural language processing and computer vision, has led to increased interest in applying these techniques to time series forecasting. This success is largely attributed to the strength of the multi-head self-attention mechanism. The standard Transformer model, however, has certain shortcomings when applied to the LTSF problem, notably the quadratic time/memory complexity inherent in the original self-attention design and error accumulation from its autoregressive decoder.
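To make the quadratic cost concrete, here is a small single-head sketch of scaled dot-product self-attention in NumPy. It is an illustration under simplifying assumptions (random weights, no masking, no multi-head split), and the function name `self_attention` is hypothetical rather than taken from any of the models discussed. The point is only that the score matrix has one entry per pair of time steps.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention for x of shape (L, d)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Score matrix of shape (L, L): one entry per pair of time steps.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Row-wise softmax, shifted by the row maximum for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
d = 8
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))

for seq_len in (96, 192):
    x = rng.normal(size=(seq_len, d))
    out, weights = self_attention(x, w_q, w_k, w_v)
    # weights.size grows as seq_len ** 2.
    print(seq_len, out.shape, weights.size)
```

Doubling the input length from 96 to 192 quadruples the number of attention weights, which is why long look-back windows become expensive with the original design.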

windows - The lengths of each seasonal smoother with respect to each period. If these are large, the seasonal component will show less variability over time. Must be odd. If None, a set of default values determined by experiments in the original paper [1] is used.

Jaleel White Then & Now!

Jaleel White Then & Now! Richard "Little Hercules" Sandrak Then & Now!

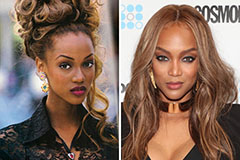

Richard "Little Hercules" Sandrak Then & Now! Tyra Banks Then & Now!

Tyra Banks Then & Now! Pierce Brosnan Then & Now!

Pierce Brosnan Then & Now! Terry Farrell Then & Now!

Terry Farrell Then & Now!